AI Agent Builder

The building of an AI agent.

Project Overview

At the time of this project, the team had already implemented an early-release AI Agent Builder in Workflows. This version of the builder had been released with the expectation that only consultants would use it. As a result, the product's usability and presentation were underwhelming, and it was difficult to use. Due to time constraints, the developers couldn’t support any additional functionality in the Agent Builder, so the goal of this project was to improve usability purely from an interface design and presentation perspective.

Our long-term goal for the Agent Builder was to allow access outside of Workflows, which informed and influenced this re-design.

Year: 2025

Original Timeline: approx. 6 months

Re-design Timeline: approx. 2 weeks

My Role

Even before this project began, I owned a large portion of the design research process and provided early exploration of key concepts. I created multiple low- and high-fidelity wireframes and prototypes to communicate these different approaches. I also sent weekly email updates to leadership on my progress and findings.

Prior to this re-design, I compiled some of my research into a slide deck to highlight key usability concerns and compare our initial Agent Builder to industry standards. I collaborated with our Chief Design Officer and presented this deck to leadership to raise awareness and generate support for the re-design that followed.

During the re-design, I worked closely with development leads and project managers to align efforts and advocate for an easy-to-use product that could integrate with our existing automation tool.

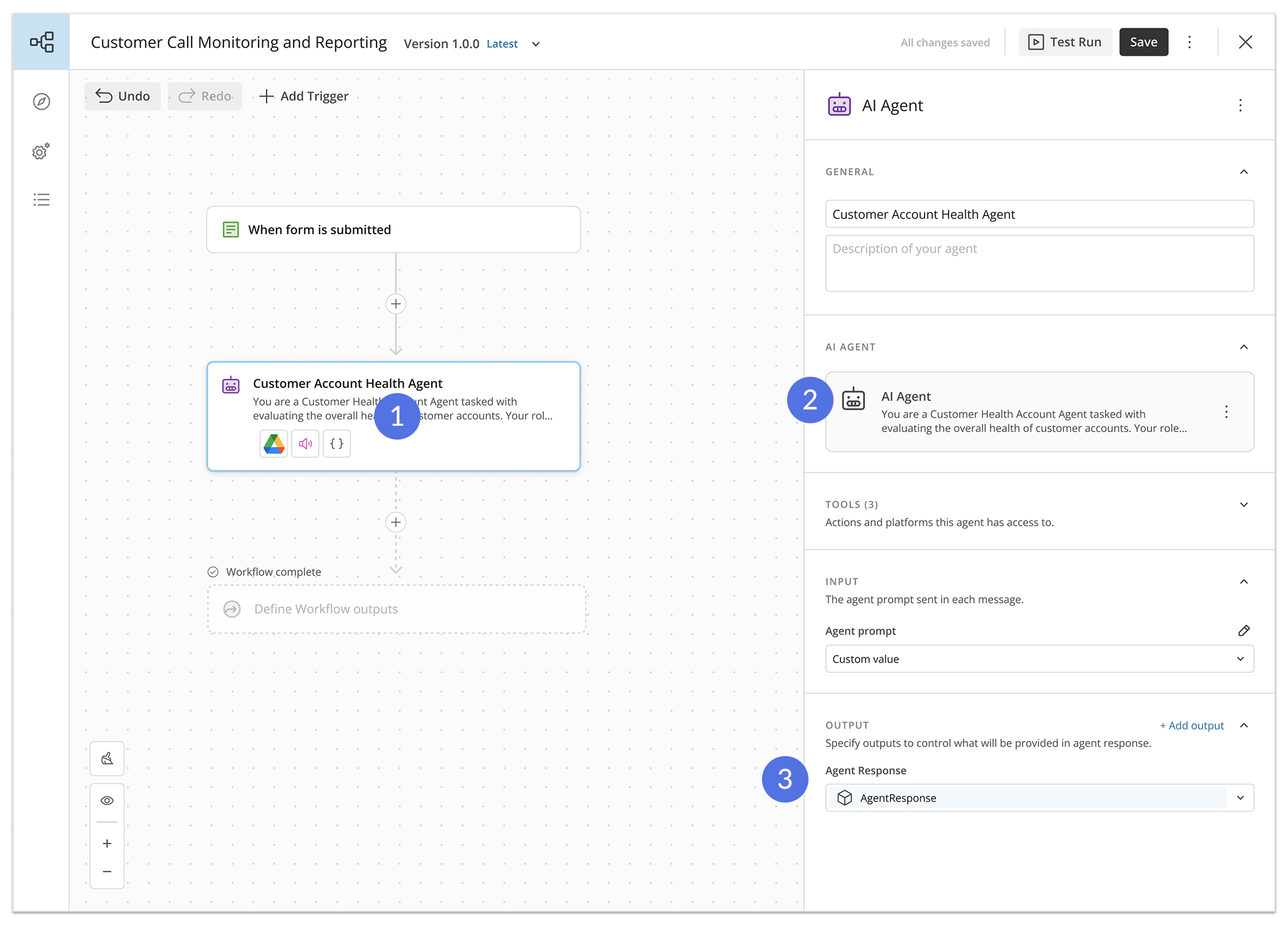

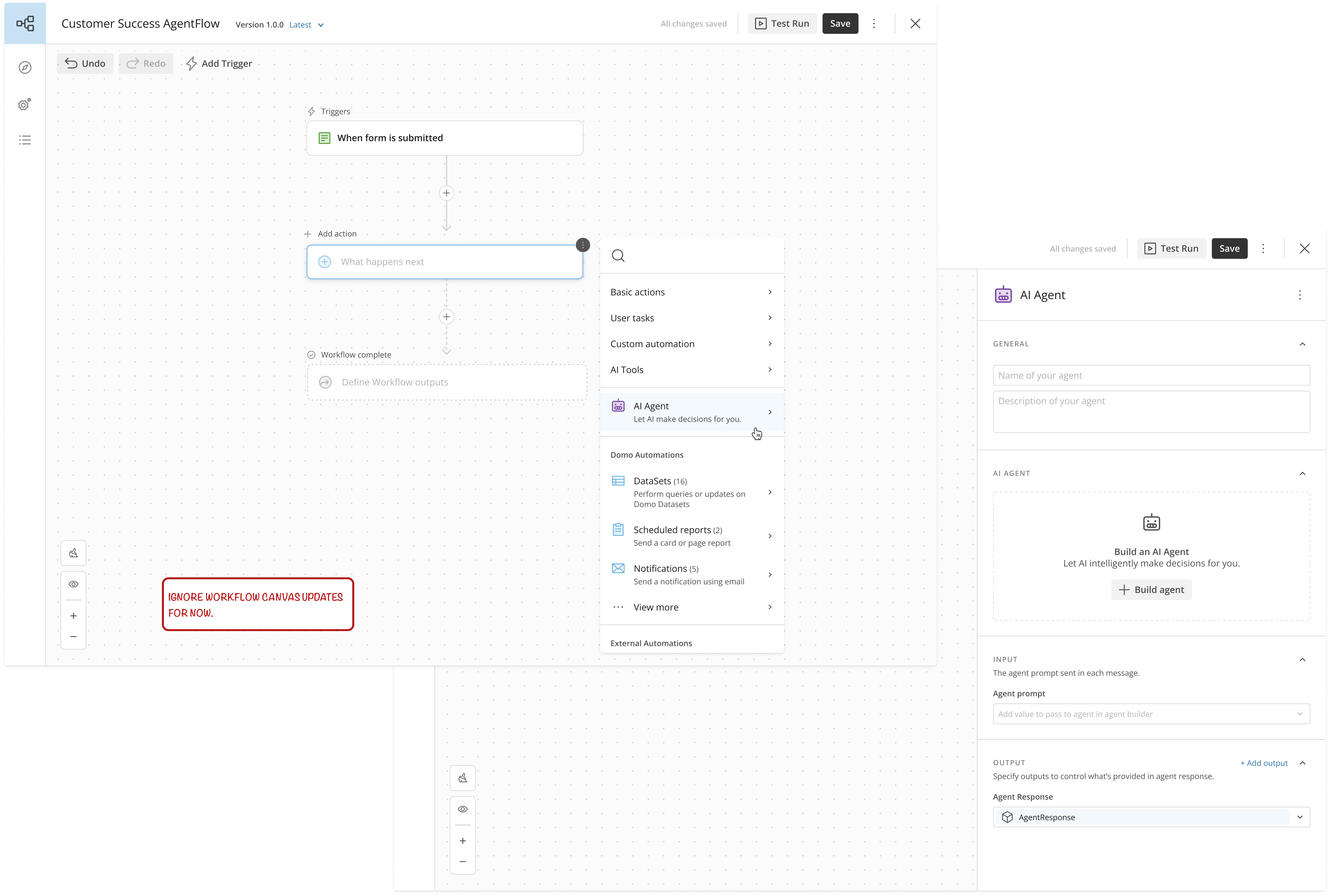

Agents within Workflows

From the workflow canvas, users would choose to add an ‘AI Agent’ action. Once this action was added, a detail panel would appear on the right-hand side of the canvas. Selecting ‘Build agent’ would then launch the agent-building experience.

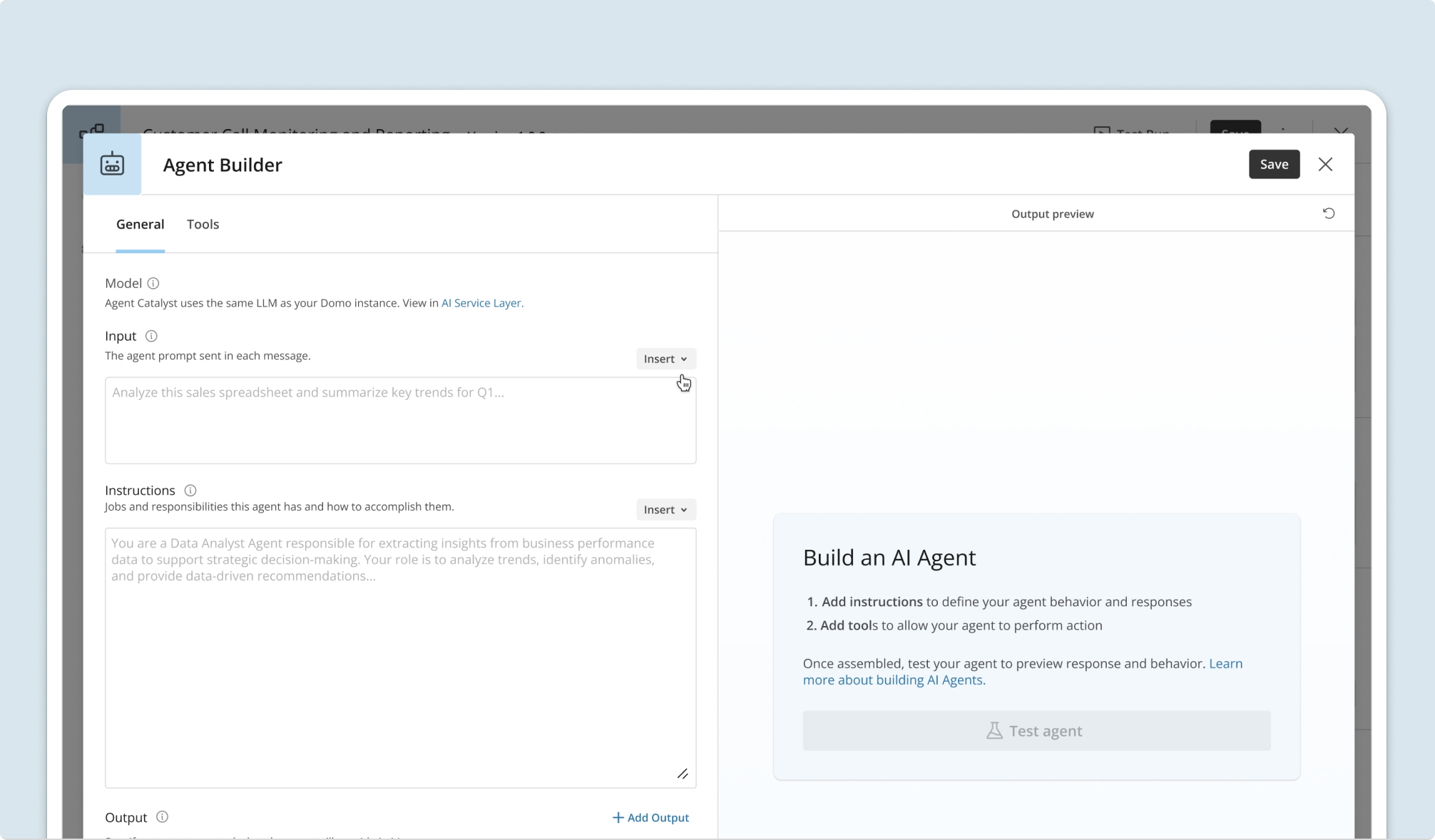

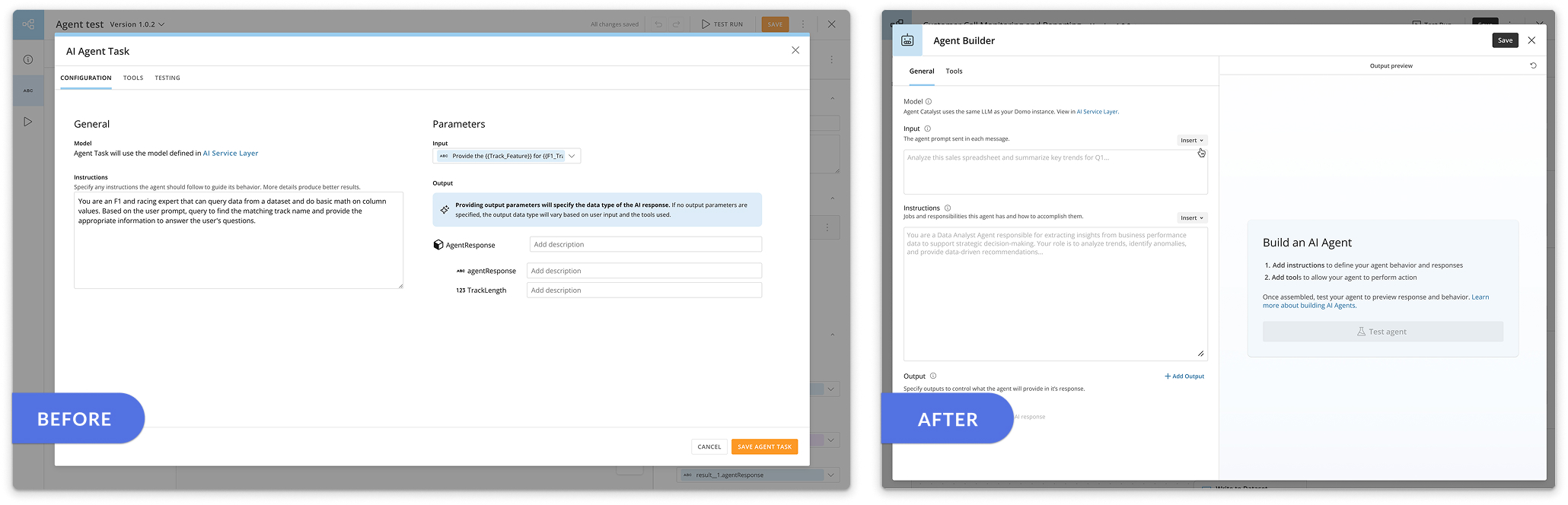

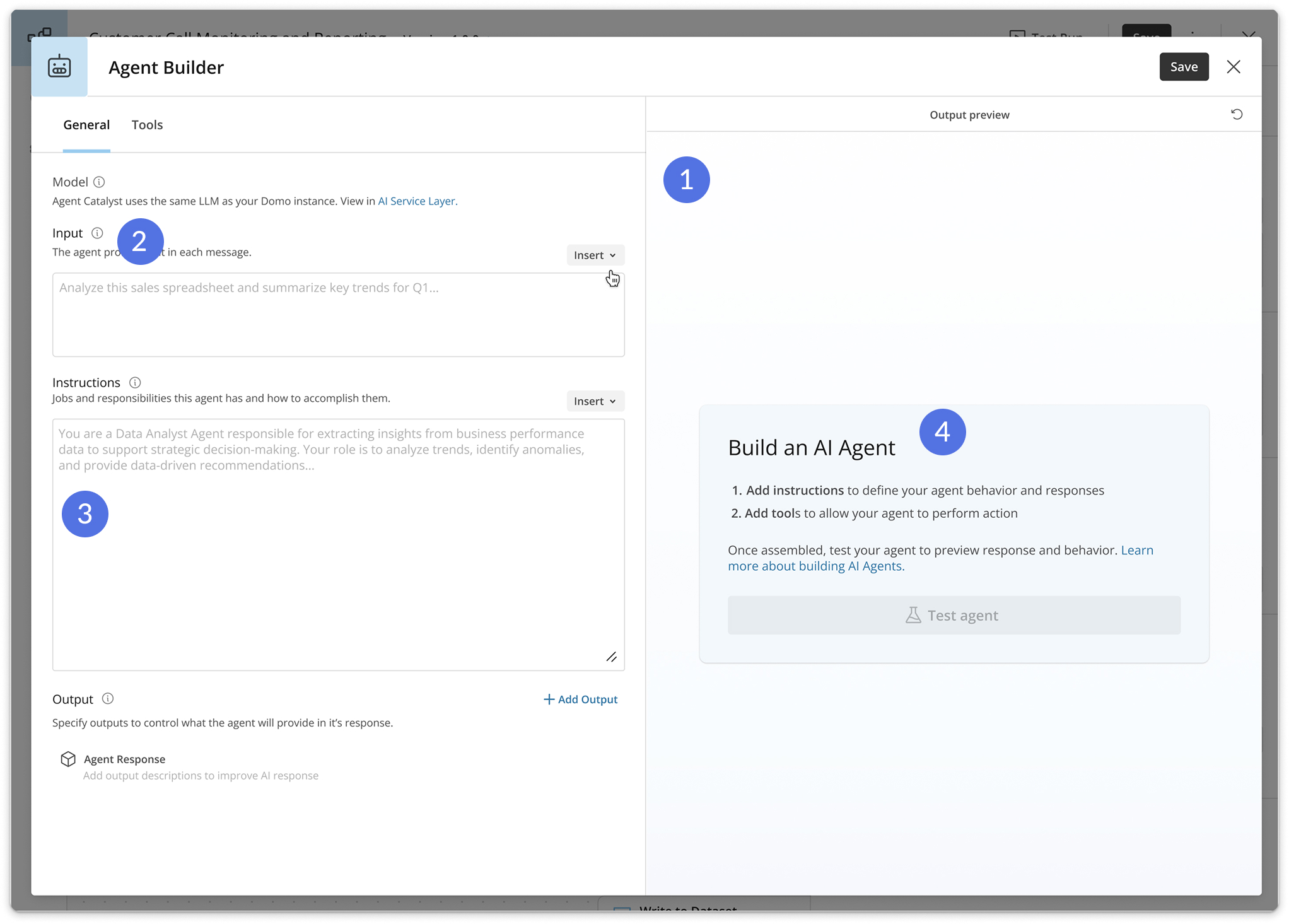

New Layout

Our marketing had advertised, and our research supported, that the key steps in creating an agent were selecting an LLM, providing instructions, adding tools, and supplying knowledge sources. In addition to making these steps more obvious, improvements included:

- Side-by-side configuration and testing, which allowed users to quickly edit and test changes.

- A more natural layout of ‘input’, ‘instructions’, and ‘outputs’, in that order.

- Improved help text, plus shadow text that gave examples of what was expected.

- Guidance on how to build an agent, highlighted on the right.

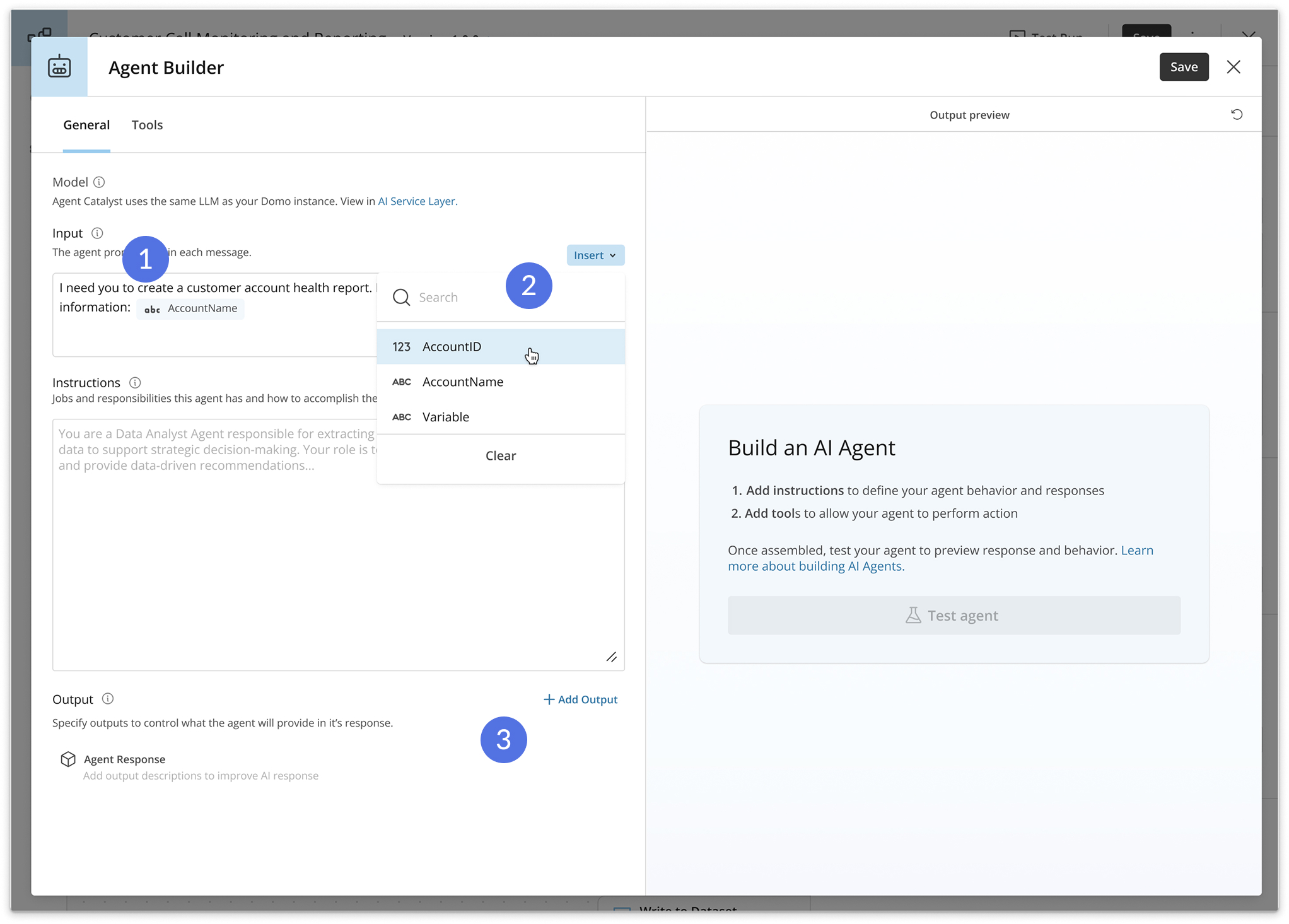

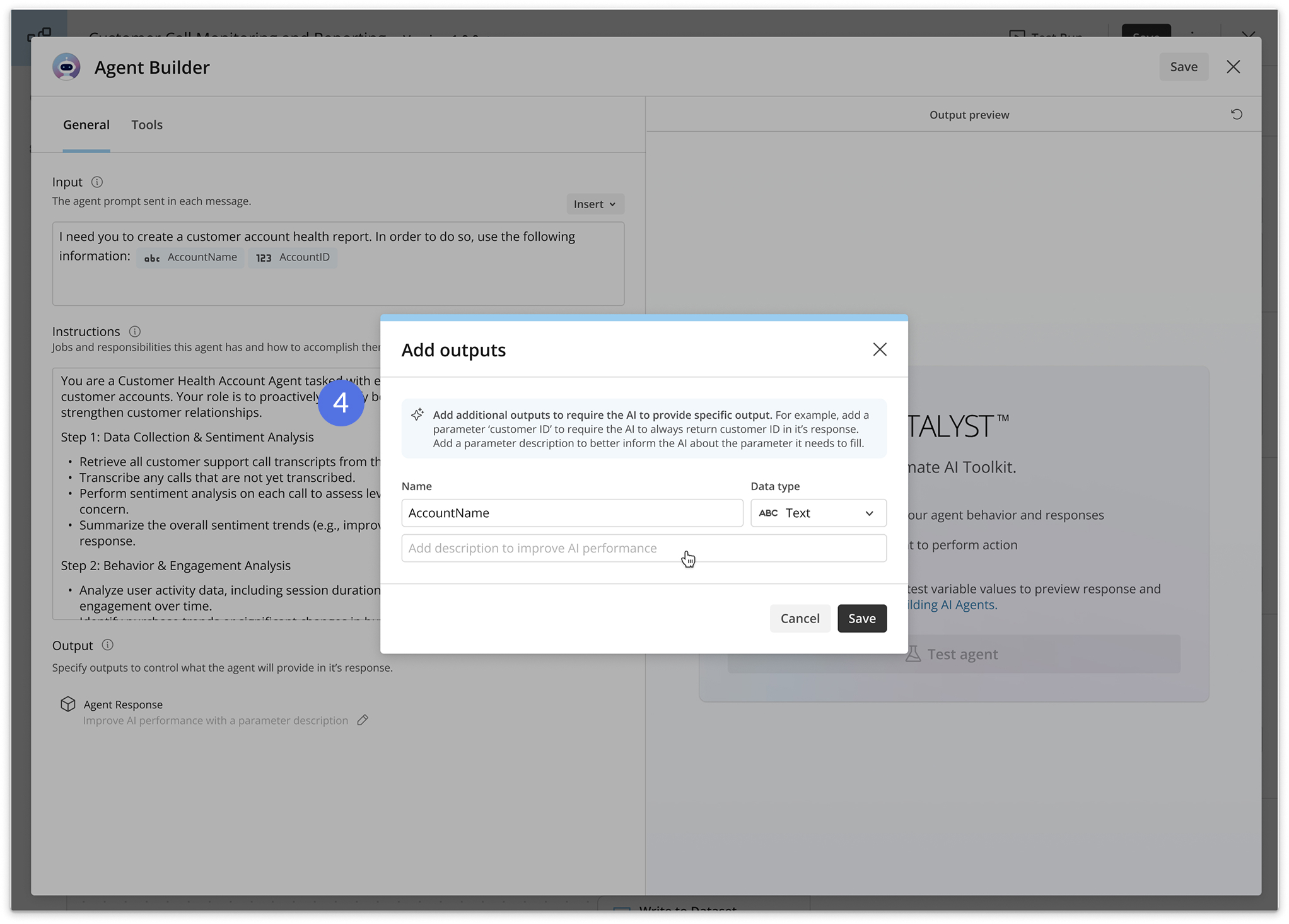

Agent inputs and outputs

By default, the agent builder provided an output called ‘agent response’, which was then linked to a variable in Workflows. Output descriptions were an important way to tell the AI what information it should fill these outputs with. To make this clearer, I made the following changes:

- Simplified language around agent outputs.

- Allowed users to insert variables without opening a modal.

- ‘Add output’ would open a modal for adding outputs and their descriptions individually. This both visually simplified the output creation experience and encouraged users to provide descriptions when creating new outputs.

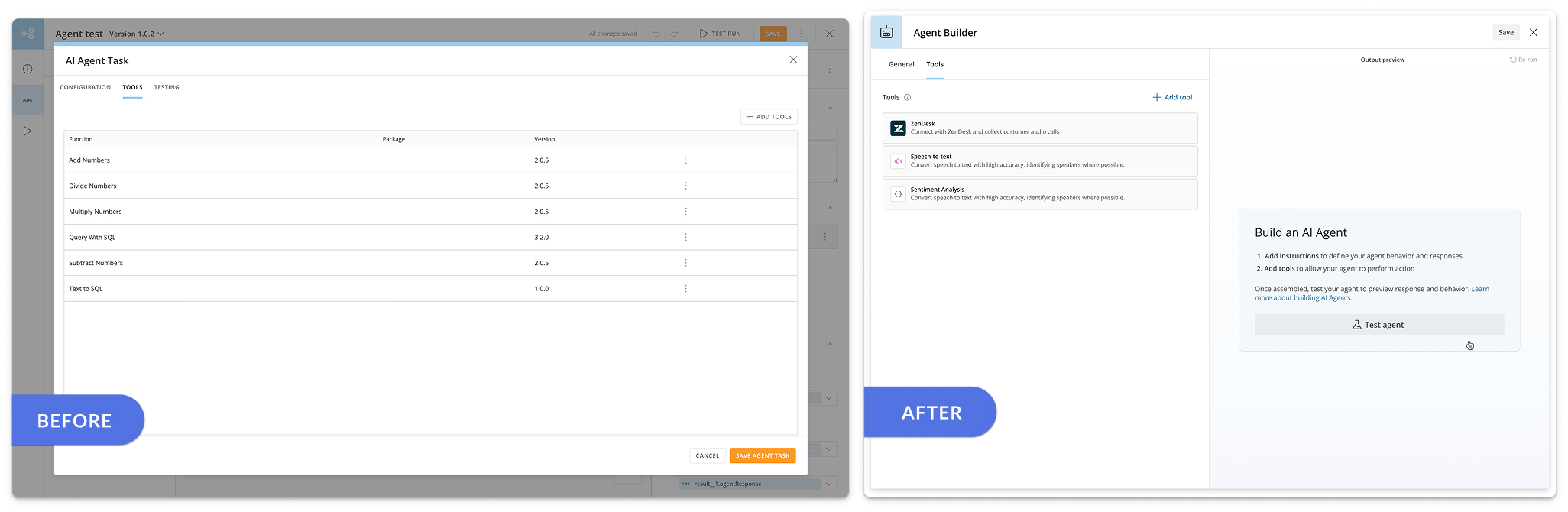

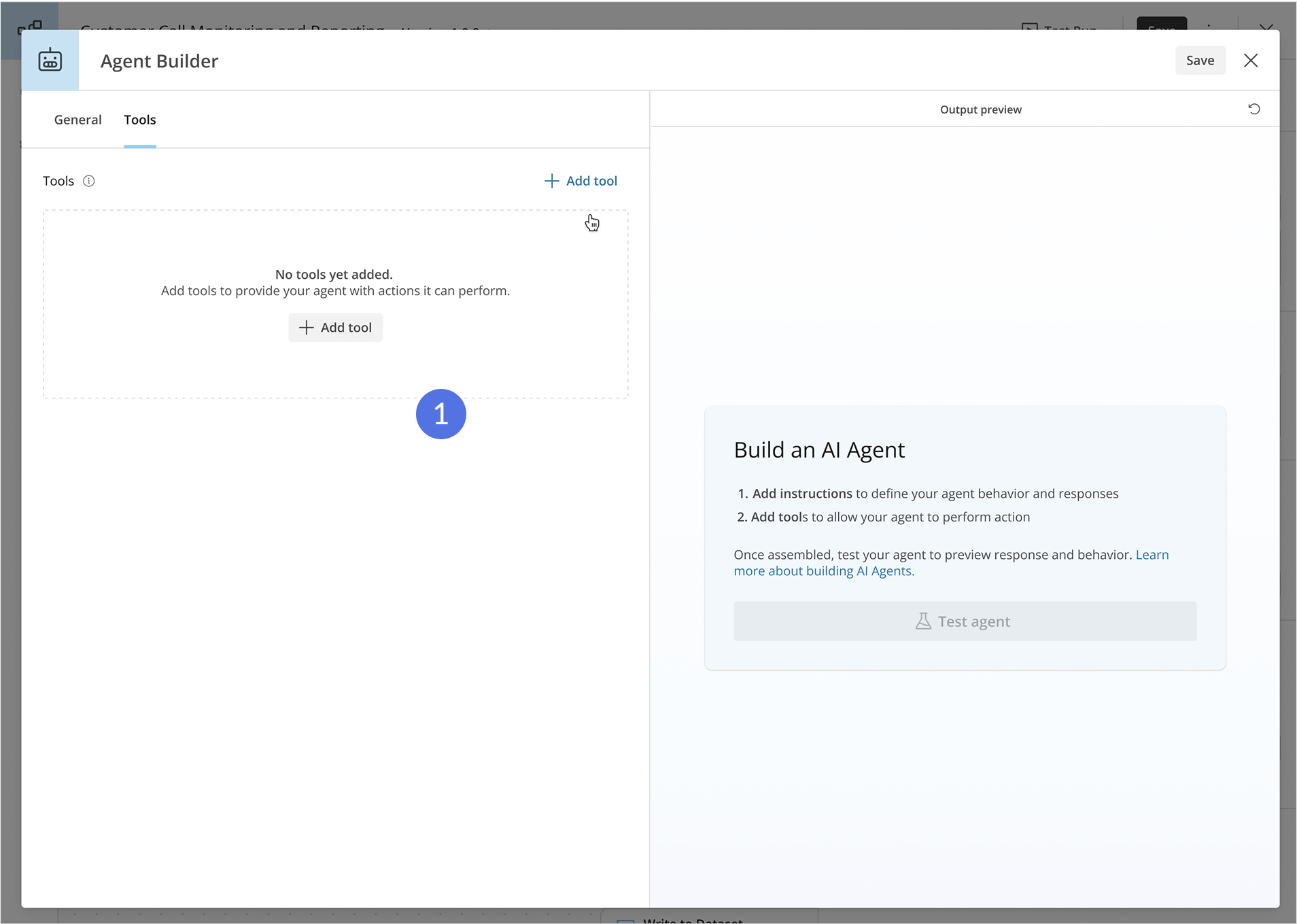

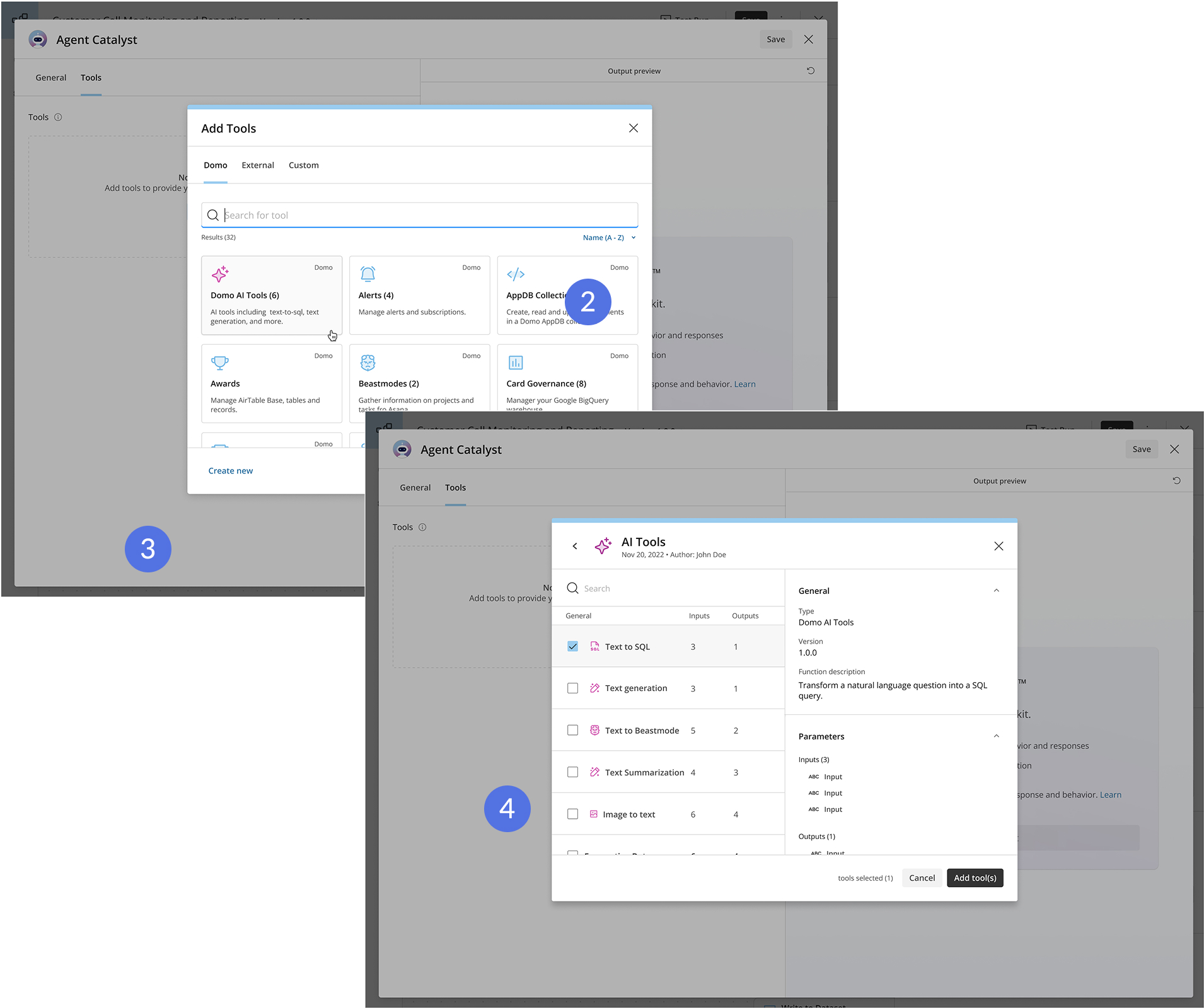

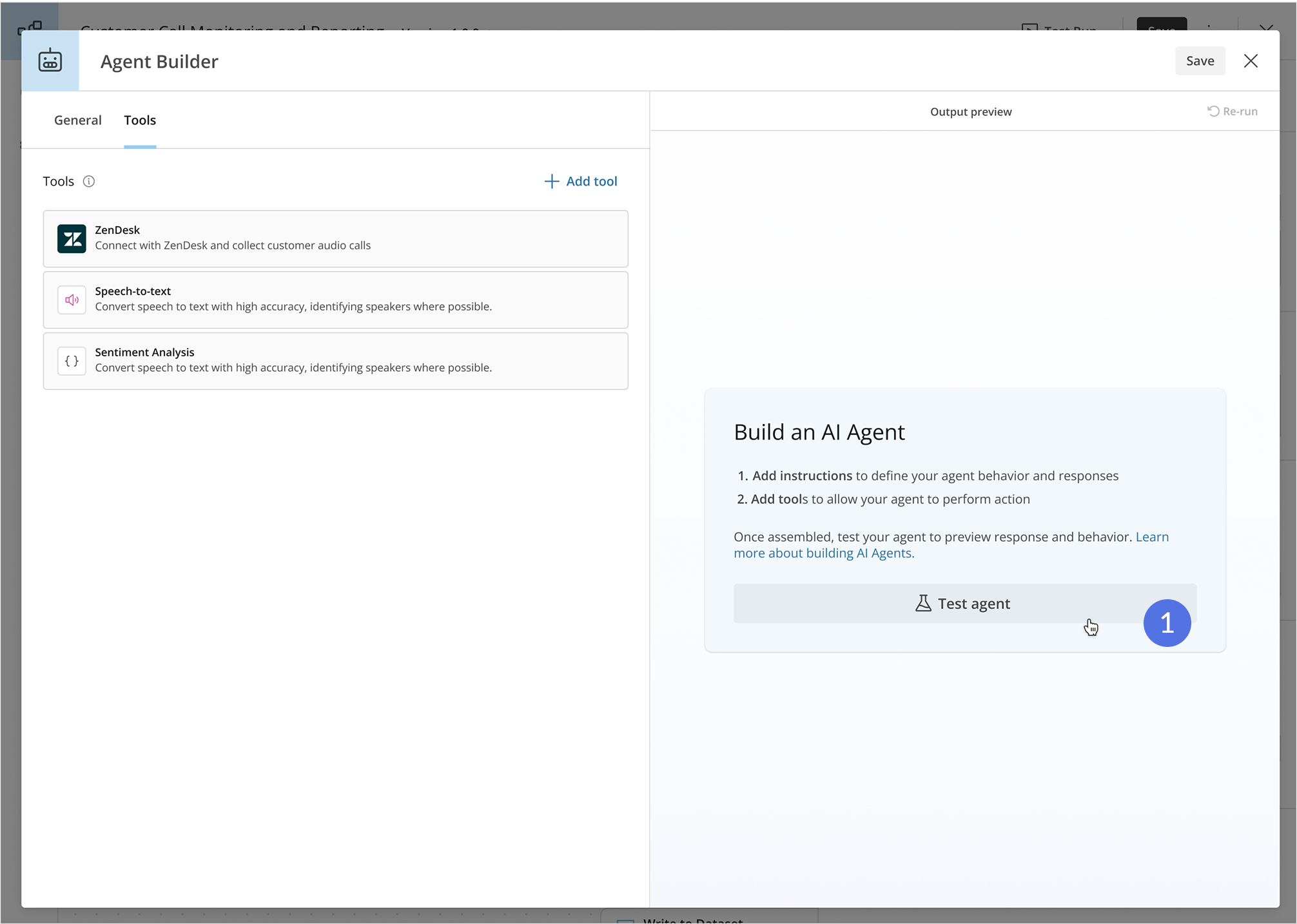

Providing Tools

In the previous experience, users would accidentally close the entire builder while trying to close only the tool modal. The tools also all looked the same, providing little visual differentiation. Tool descriptions were always visible as well, causing confusion about whether they were required. To address this, I made the following changes:

- Improved zero state before tools have been added.

- Moved the tool modal into a smaller modal within the agent builder modal.

- Added tool icons to visually distinguish between tool types.

- Maintained support for multi-selecting tools.

- Descriptions appeared as they did elsewhere in the product, for visual familiarity.

In addition to these changes, we provided descriptions for all Domo-provided tools. This meant that users would not need to bother with descriptions unless they were using a custom tool. Custom tools indicated that they needed a description once the tool had been added.

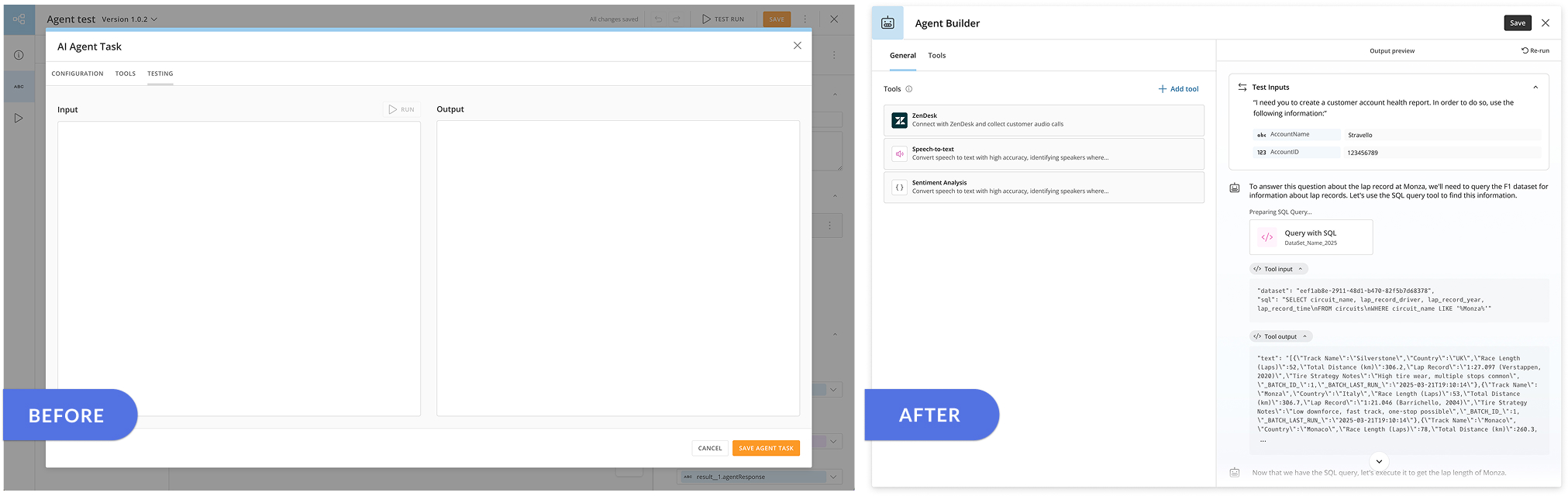

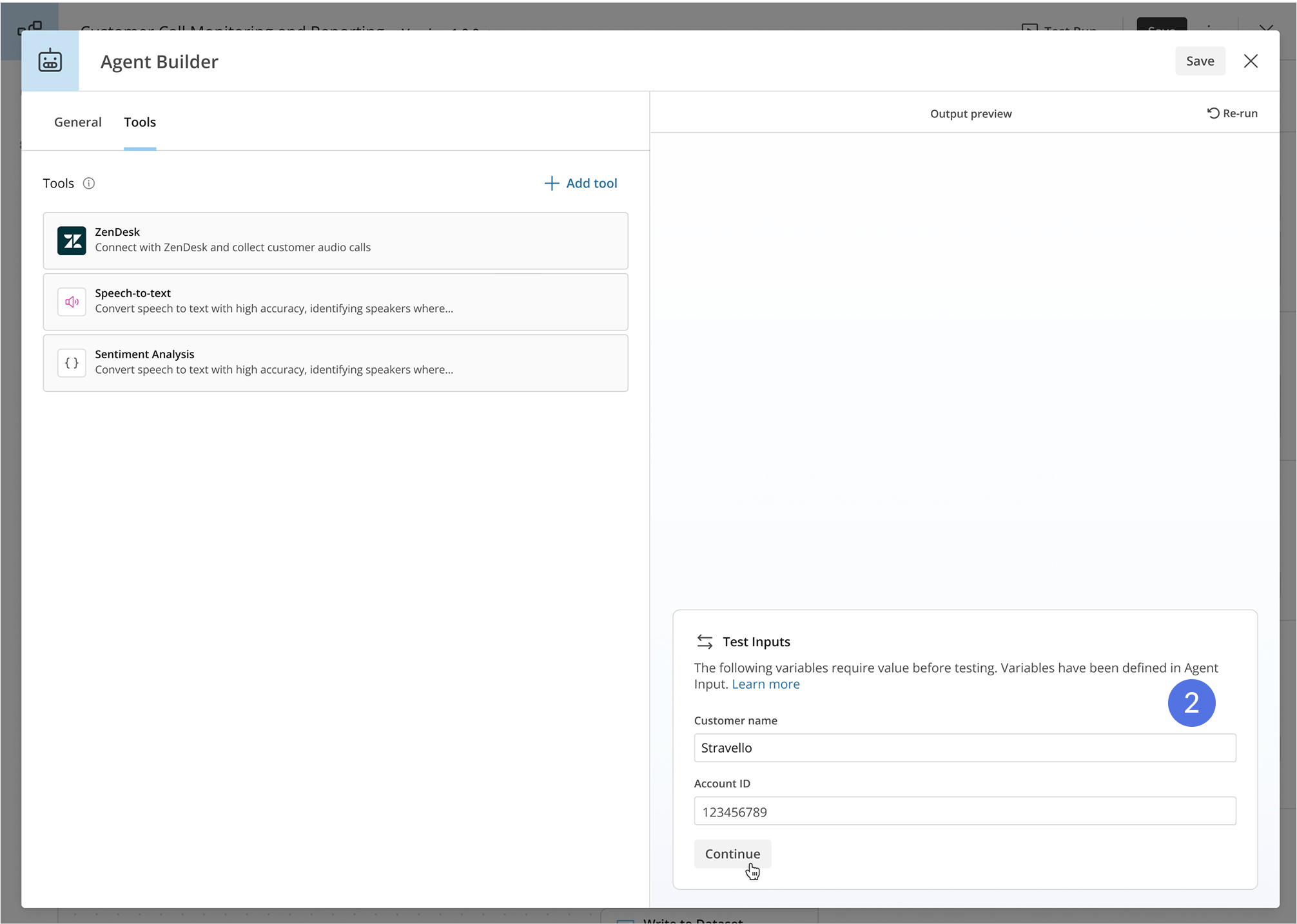

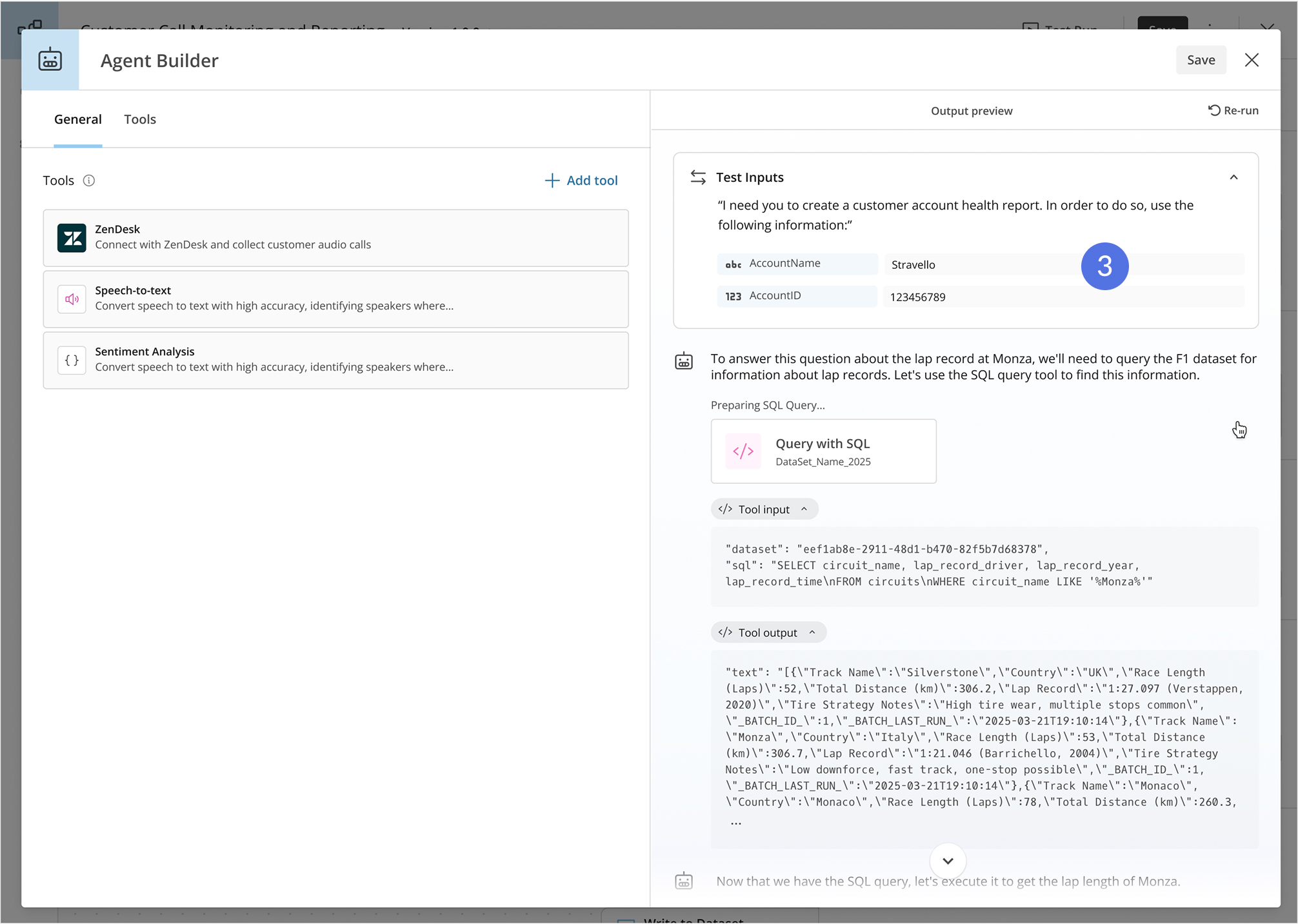

Testing the agent

Users had been confused about what the agent was actually testing. The agent input was separated from the testing screen, and users were even prompted to enter a new input. In addition, they could test at any time, which resulted in failed tests. To solve this, I made the following changes:

- Testing was disabled until tools, a prompt, and instructions had been added.

- If variables had been used in the input prompt, values for those variables were requested before testing.

- If no variables had been used, the test would run with the input prompt displayed at the top. This helped users connect the input prompt with the test and its result.

Back in the Workflow canvas

Prior to the re-design, users would only see an agent icon and agent name on the workflow canvas. Re-design changes I made included:

- Users could see the tools the agent had access to in both the action shape and detail panel.

- A preview of the instructions was also viewable from both places.

- All inputs and outputs were automatically mapped, so users no longer needed to understand the more technical aspects of Workflows.